Thank you for reading my first article. I intend to write this article, and any future ones, without bias unless stated otherwise. English isn't my first language, so I may make mistakes, but hopefully you will still be able to understand my point. If you find any mistakes (grammatical or factual), please read the notes at the bottom and kindly correct me. Please do not repost my article under any circumstances without my permission.

I. Before electronic computers

Being a computer used to be a job: a computer was a person who carried out calculations by hand. The earliest known written use of the word in this sense dates from 1613. The job was often done by women, especially during the World Wars, when many men were away fighting (1).

II. The beginning of electronic computers

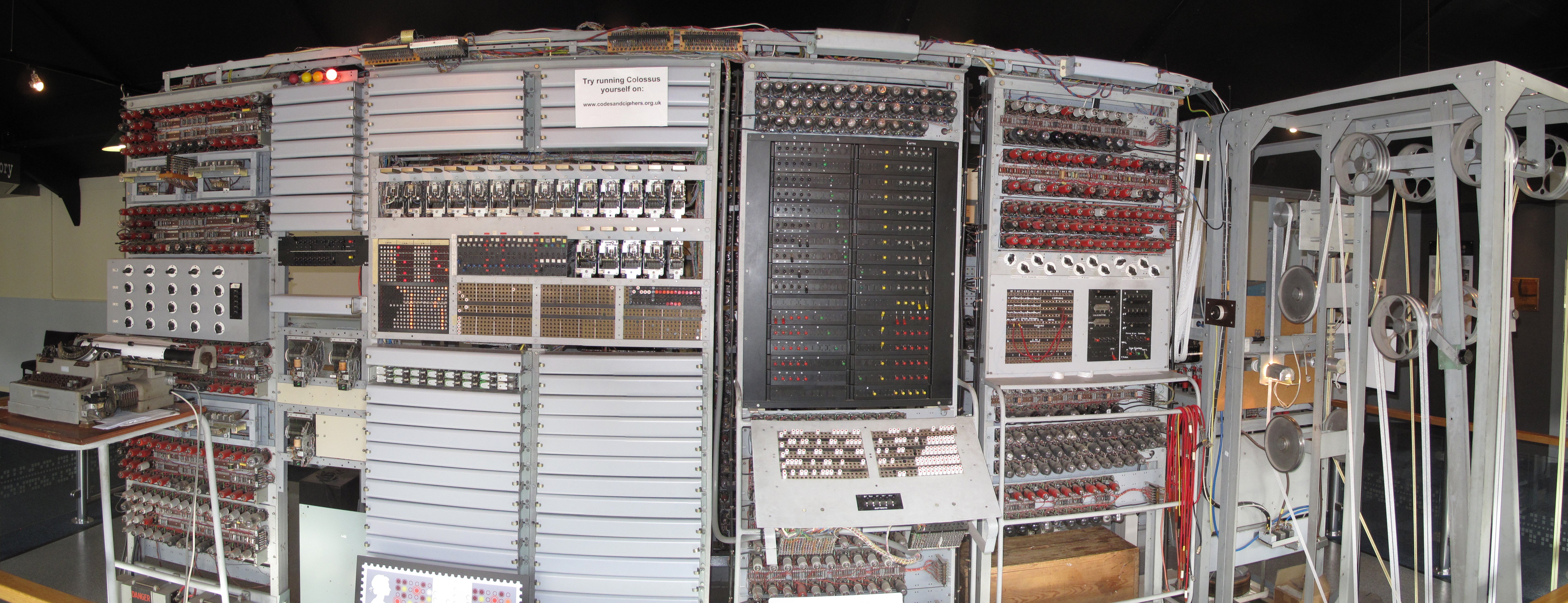

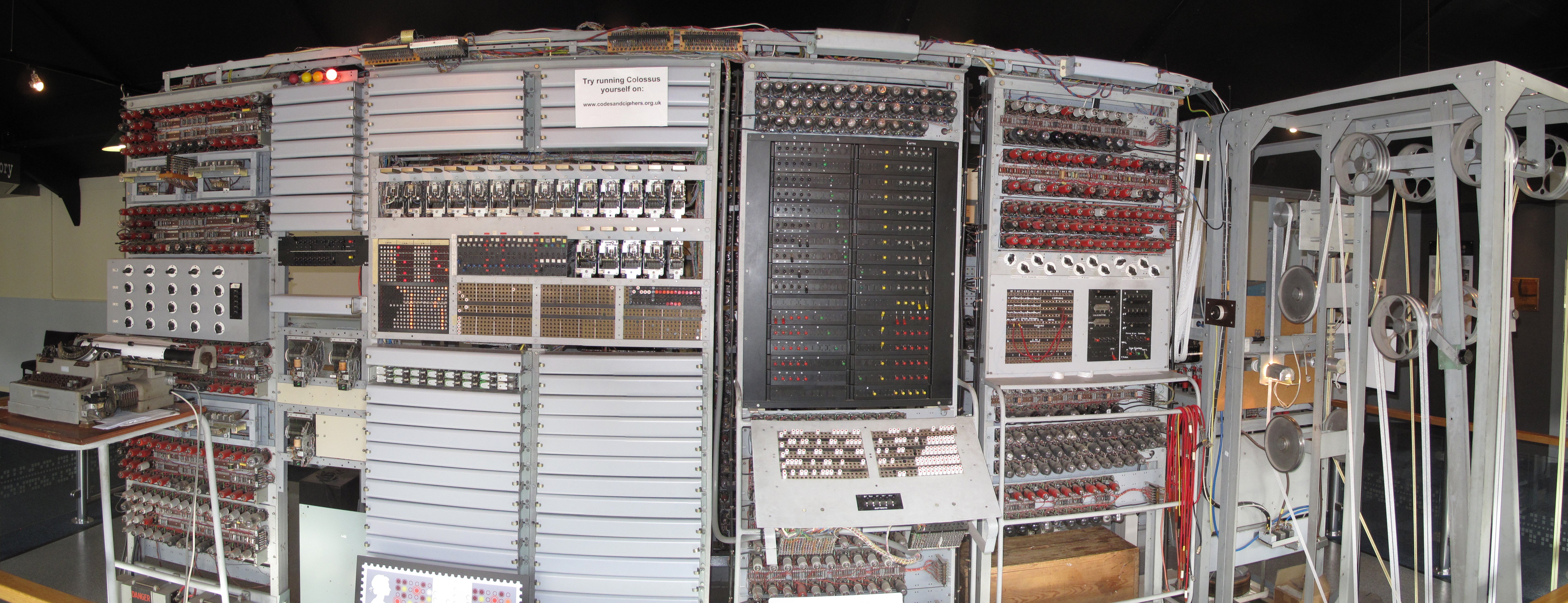

Colossus is widely regarded as the first programmable, electronic, digital computer. The Mark 1 was working by December 1943. It was operated by members of the Women's Royal Naval Service (WRNS, whose members were known as "Wrens"), with engineers on hand for maintenance and repair. By the end of the war, the staff numbered 272 Wrens and 27 men (2).

Colossus was not Turing-complete, was not general-purpose, and read its input from paper tape, but it was the first computer that was digital, fully electronic and programmable, and it is interesting that women operated it. For comparison, in 1984, 37.1% of computer science degrees in the United States were awarded to women (3).

III. Personal computers

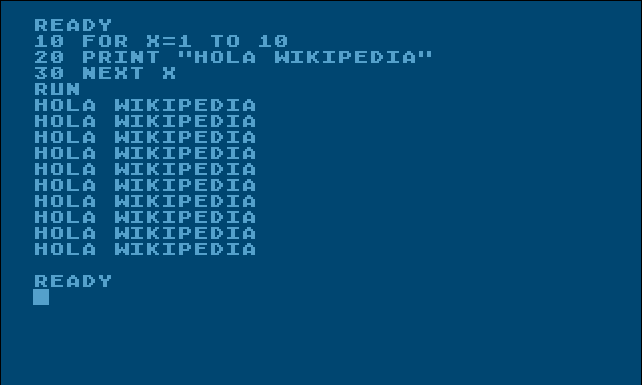

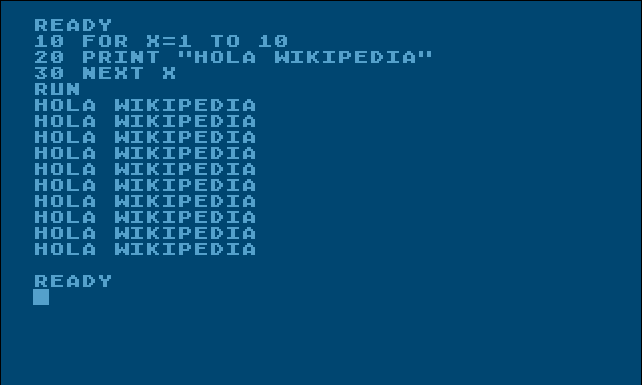

Personal computers became popular in the 1980s (4). Not many people outside of computing studies wrote software. The choice of easy-to-learn programming languages was small (BASIC, Fortran, etc.) and you couldn't do a whole lot with them, so most software was written in low-level languages. These are difficult to learn without a teacher, and online tutorials and resources (websites like Stack Overflow and GitHub, or online documentation) weren't available like they are today: if you didn't understand something in a BASIC book, you had no one to ask. In those years, people mostly consumed software developed by teams of programmers or by highly dedicated individuals.

But as the internet opened up to the public (1993) and computers became more powerful, we come to the next point...

IV. First mainstreaming of programming

I believe what caused the downfall was high-level programming languages. Because they made it very easy to create apps, people who might have chosen something lower-level and more efficient chose a high-level language instead, simply because it was easier. This might sound good, right? More people get into programming and everyone makes apps. But I believe this is poison. If everyone learns only high-level languages, who will maintain the languages themselves? We won't reach the point where absolutely nobody knows low-level languages, but we're already seeing the effects of fewer people learning them: slow and buggy software, having to upgrade your CPU or GPU to run basic software (for example Windows 11 and Discord), and many more people getting into Computer Science, where they learn Python or JavaScript (5). Also, by this point (1998), the percentage of Computer Science degrees awarded to women had fallen to 26.7% (3).

I also mentioned the internet. I believe the languages of the web (HTML, CSS and JavaScript) were made without much thought for usability, code design, performance or coherence. They were developed by different people and somehow brought together through standards, and all web browser developers did was follow those standards without asking whether they were any good. Web languages forced everyone who wanted to make web pages (or, more recently, apps) to use high-level languages, and they truly made building apps easy for the average hobbyist thanks to online resources (not at today's level, but still), forums, communities and a low barrier to entry (literally just a text editor). This leads us to the next point...

V. Mainstreaming of programming among everyone

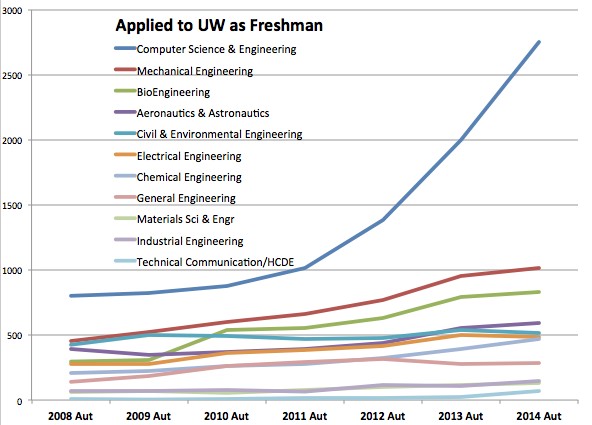

The number of developers is growing fast, and many of them have dollar signs in their eyes. Developers who use high-level programming languages are in high demand because those languages are the fastest to develop in and the most widely known, so these developers make the most money. Low-level programmers may leave their current jobs because they could program in high-level languages and get paid much more. People who previously had no interest in computers suddenly do because the salaries are so high. These people make software (usually web pages or servers) with no passion or interest other than money, and as a result they create Soulless Software: unmaintainable, unreadable, inefficient code that works for one purpose and has to be completely rewritten when its author leaves, because nobody can untangle it. Oh, and in 2008, fewer than 12% of Computer Science degrees were awarded to women (3).

Oh, and let's not forget the chips and boards all of this runs on. Electronics engineers are as important as computer scientists, maybe even more so. So surely there are at least as many electronics engineers as computer scienti...

Source: https://www.geekwire.com/2014/analysis-examining-computer-science-education-explosion/


Well... uhh

VI. The disaster of today

High-level programmers are now fighting over which language is best for different applications, which one is the best to learn this year, which is the easiest to learn, and which one pays the most. Of course, because the people who make these languages really don't want them to be useful. Have you heard about this new language called Kotlin? It is modern, safe, productive (not buzzwords), cross-platform, its syntax is similar to Java's, it runs on the Java virtual machine and can use existing Java code, and it is better than Java. How cool, right? It is so good that, now that we have a new language, we need to build a completely new ecosystem around it (slightly less so in Kotlin's case, but you get the point), but it is *better*! In case you missed the irony, I am mocking the redundancy that has also appeared.

I'm beginning to hate myself for writing this, but I must in order to prove my point: JavaScript libraries. JavaScript was designed to be a malleable language, and I'd say it succeeded, but that isn't necessarily a good thing. Because it's malleable, much of the work of extending the language was left to its users. Those users aren't just individuals; they are also companies, and companies don't want to team up with individuals or other companies, so they fragment the ecosystem with competing frameworks and libraries. And here we are: unable to easily build a modern website with vanilla JavaScript, HTML and CSS, having to choose between 50 different libraries, and even once you've chosen one, it may become unmaintained within a year because the company no longer cares (AngularJS). Oh, and you may even want a library that helps you make those libraries faster, or something (Astro). I've focused on JavaScript for web pages, but JavaScript is also used on servers, and I'm not sure what that's like.

As we know, far fewer women are getting Computer Science degrees now, but it appears that for some reason transgender women have an interest in Computer Science. If it is true that transgender women are women, then we may be seeing a resurgence of women in programming and computer science. It also looks like they have a bias towards using Rust for low-level programming. Good? But I don't understand why they won't use anything else and get very defensive when you suggest other options.

We now also have people who literally cannot read what's on the screen and cannot operate even the most basic user interfaces. The solution has been to dumb down the software these people can't use, but that also dumbs it down for the people who could use it, hiding very useful options and making some tasks take longer for those who can read what's on the screen (for example, the Windows taskbar context menu).

And with all of this inefficiency, I am worried about something I have never seen anyone else worry about: the environment. We put a lot of blame on cars and factories for pollution, but we have completely forgotten about computers and the power they draw. We have started to believe that it is entirely normal for a computer idling on Windows to draw 100 W. We should acknowledge that it is not. If Discord were rewritten in a low-level language, hundreds of thousands of computers would each draw just a tiny bit less power, and that would add up. If we did that for more software, quite a lot would add up. Unfortunately, we live in the world I have just described, and it's unlikely to happen unless something huge changes.
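To give a rough sense of how "a tiny bit less power" adds up, here is a minimal Python sketch of the arithmetic. The inputs are purely hypothetical assumptions (1 W saved per machine, 100,000 machines, 8 hours of use per day), not measurements of Discord or any other program; the function name `yearly_energy_saved_kwh` is also just made up for illustration.

```python
def yearly_energy_saved_kwh(watts_saved_per_machine: float,
                            machines: int,
                            hours_per_day: float,
                            days_per_year: int = 365) -> float:
    """Energy saved per year, in kilowatt-hours.

    All inputs are hypothetical estimates; plug in your own numbers.
    """
    total_watts = watts_saved_per_machine * machines          # combined power reduction (W)
    watt_hours = total_watts * hours_per_day * days_per_year  # energy over one year (Wh)
    return watt_hours / 1000                                  # convert Wh to kWh


# Hypothetical example: 1 W saved on each of 100,000 machines used 8 hours a day.
saved = yearly_energy_saved_kwh(1, 100_000, 8)
print(f"{saved:,.0f} kWh per year")  # prints "292,000 kWh per year"
```

The result scales linearly with every input, so doubling the number of machines or the hours of use doubles the savings; 292,000 kWh is the same as 292 MWh.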

I highly recommend you read the following pages and pay close attention to the years.

References and notes:

1. https://en.wikipedia.org/wiki/Computer_(occupation)

2. https://en.wikipedia.org/wiki/Colossus_computer#Operation

3. https://en.wikipedia.org/wiki/Gender_disparity_in_computing#Statistics_in_education

4. https://en.wikipedia.org/wiki/Home_computer

5. I have not studied Computer Science at university, so I do not know for certain that this is what they teach. I assumed they teach Python and JavaScript because those are the languages I hear mentioned most often when Computer Science comes up.

Further reading:

BASIC - Wikipedia (en.wikipedia.org)

en.wikipedia.org

Women in computing - Wikipedia (en.wikipedia.org)

en.wikipedia.org

Discarded paragraph:

Aren't developers the only ones who program? No. In computing, the term "developer" means someone who succeeds in making the computer do what they want, without taking into consideration the efficiency, speed or optimization of how it is done. That means if a 4-year-old makes a really crappy game in Scratch, he is a developer. By definition, that is all correct. But I don't think a 4-year-old should receive the same label as a highly trained professional.
